How a Node.js Memory Leak Took Down Our Next.js App, and What We Learned

A regression in Node.js 20.16.0 introduced a memory leak in the built‑in Fetch API (via undici). After our hosting platform automatically upgraded the runtime, our production Next.js containers started crashing with out‑of‑memory (OOM) exceptions. We mitigated the issue by pinning Node to 20.15.1 and later upgraded safely to 22.7.0, which includes the upstream fix.

The Symptom: Sudden OOM Crashes

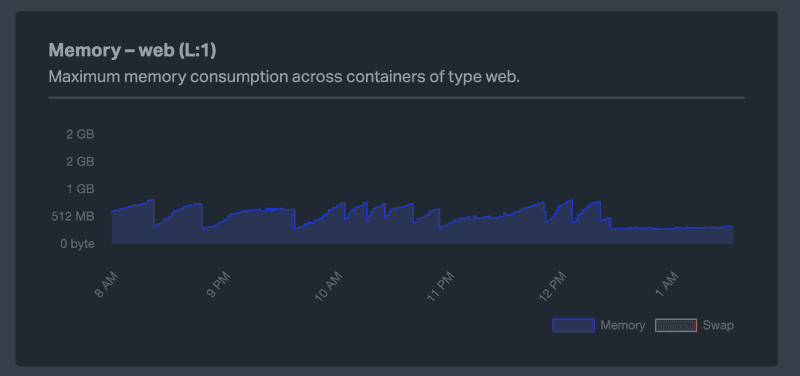

A routine deploy was followed by a flood of alerts: every pod restarted within minutes, each marked OOMKilled. Metrics showed a sawtooth memory profile: usage climbed until a pod was killed, dropped on restart, then climbed again, which pointed strongly to a leak.

First Instinct: Audit Our Own Code

We spent the first couple of hours profiling allocations, searching for long‑lived objects and event‑listener leaks. When even an empty endpoint reproduced the behaviour, we realised the culprit lay outside our codebase.
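A minimal sketch of the kind of sampling that helps in this phase, assuming nothing beyond Node's built-in `process.memoryUsage()`. The helper names `memorySnapshot` and `startMemoryLogging` are hypothetical, not part of our codebase. Comparing `heapUsed` with `rss` over time gives a rough hint of whether growth sits in the JavaScript heap or outside it.

```javascript
// Hypothetical helper: one snapshot of process memory, converted to MiB.
function memorySnapshot() {
  const { rss, heapUsed, external, arrayBuffers } = process.memoryUsage();
  const toMiB = (bytes) => Math.round((bytes / 1024 / 1024) * 10) / 10;
  return {
    at: Date.now(),
    rss: toMiB(rss),               // total resident memory of the process
    heapUsed: toMiB(heapUsed),     // live objects on the V8 heap
    external: toMiB(external),     // memory held by C++ objects bound to JS
    arrayBuffers: toMiB(arrayBuffers),
  };
}

// Hypothetical helper: log a snapshot at a fixed interval.
// Returns a function that stops the logging.
function startMemoryLogging(intervalMs = 10_000, log = console.log) {
  const timer = setInterval(() => log(JSON.stringify(memorySnapshot())), intervalMs);
  timer.unref(); // do not keep the process alive just for logging
  return () => clearInterval(timer);
}
```

Calling `startMemoryLogging()` once at server start is enough to get a time series into the container logs.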

The Culprit: Node.js 20.16.0

A GitHub thread titled “Possible memory leak in Fetch API” pointed us to a Node.js issue confirming the leak in v20.16.0. The root cause was a regression in undici, the HTTP client behind the Fetch API. The regression traces back to undici v6.16.0, and the fix shipped with Node.js 22.7.0.
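When matching a running container against an upstream issue like this one, it helps to know exactly which Node.js and bundled undici versions are in play. The sketch below reads them from `process.versions`; the function name `runtimeVersions` is hypothetical, and the fallback string covers runtimes that do not report an undici version.

```javascript
// Hypothetical helper: report the Node.js version and the undici version
// bundled with it. Accepts a versions object so it can be checked in isolation.
function runtimeVersions(versions = process.versions) {
  return {
    node: versions.node,
    undici: versions.undici ?? "not reported",
  };
}

// Print the versions of the current runtime.
console.log(runtimeVersions());
```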

Immediate Mitigation: Pin the Runtime Version

Our package.json originally set only a lower bound on the Node.js version:

```json
{
  "engines": {
    "node": ">=20.0.0"
  }
}
```

Because the platform picks the highest compatible version, our containers silently switched to 20.16.0, the first version with the leak. We rolled back by explicitly pinning 20.15.1:

```json
{
  "engines": {
    "node": "=20.15.1"
  }
}
```

Memory usage stabilised immediately, and crashes stopped.
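The `engines` field only helps where something enforces it. Our platform does, but npm by default only warns on a mismatch. As an extra safeguard, a startup check can refuse to boot on an unexpected runtime. This is a sketch under that assumption, not something from our actual deployment. The names `REQUIRED_NODE_VERSION`, `checkNodeVersion`, and `assertNodeVersion` are hypothetical.

```javascript
// Assumption: the pinned version is duplicated here by hand; in a real
// project it could be read from package.json instead.
const REQUIRED_NODE_VERSION = "20.15.1";

// Compare the running Node.js version with the pinned one (exact match only).
function checkNodeVersion(required, actual = process.versions.node) {
  return { ok: actual === required, required, actual };
}

// Throw at startup if the runtime is not the pinned version.
function assertNodeVersion(required = REQUIRED_NODE_VERSION) {
  const result = checkNodeVersion(required);
  if (!result.ok) {
    throw new Error(
      `Expected Node.js ${result.required} but running ${result.actual}`
    );
  }
}

// Usage (for example at the top of a server entry point):
// assertNodeVersion();
```

Failing fast turns a silent runtime change into a failed deploy, which is far easier to notice than a slow leak.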

Permanent Fix: Upgrade to Node.js 22.7.0

Once 22.7.0 was released with the patch, we upgraded and locked that exact version:

```json
{
  "engines": {
    "node": "=22.7.0"
  }
}
```

Lessons Learned

- Pin critical runtime versions. Allowing the platform to auto‑upgrade can introduce breaking changes without a single code commit.

- Watch trends, not just thresholds. A steadily climbing memory graph is often the first sign of a leak.

- Stay close to upstream conversations. GitHub issues and release notes shortened our incident response from days to hours.
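To make "watch trends, not just thresholds" concrete, here is a minimal sketch that fits a least-squares line through memory samples and flags a sustained climb. The sample shape (`t` in milliseconds, `rss` in bytes), the function names, and the threshold are all assumptions for illustration. Real alerting would normally live in the monitoring system rather than in application code.

```javascript
// Least-squares slope of rss over time, expressed in bytes per minute.
// samples: array of { t: timestamp in ms, rss: bytes }
function slopePerMinute(samples) {
  const n = samples.length;
  if (n < 2) return 0;
  const meanT = samples.reduce((sum, p) => sum + p.t, 0) / n;
  const meanRss = samples.reduce((sum, p) => sum + p.rss, 0) / n;
  let numerator = 0;
  let denominator = 0;
  for (const p of samples) {
    numerator += (p.t - meanT) * (p.rss - meanRss);
    denominator += (p.t - meanT) ** 2;
  }
  if (denominator === 0) return 0; // all samples share one timestamp
  return (numerator / denominator) * 60_000; // bytes/ms -> bytes/minute
}

// Flag a window of samples whose growth rate exceeds a chosen threshold.
function isClimbing(samples, thresholdBytesPerMinute) {
  return slopePerMinute(samples) > thresholdBytesPerMinute;
}
```

A slope-based check can fire while absolute usage is still well below the memory limit, which is the window in which a leak is cheapest to catch.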